Patterns in data often reveal more than meets the eye – predicting trends, optimizing decisions, and even mapping real-world behaviors. BigQuery’s built-in functions unlock these insights, making predictive modeling and geospatial analysis more accessible with SQL.

This article explores five essential BigQuery functions along with their practical applications. By leveraging these tools, organizations can enhance decision-making, optimize strategies, and gain a deeper understanding of both structured and location-based data.

BigQuery ML allows users to create, train, and run machine learning models using SQL, making predictive analytics accessible without requiring advanced ML knowledge. It enables businesses to build models, make predictions, and evaluate performance directly within BigQuery, simplifying AI-driven decision-making.

BigQuery’s geography functions analyze spatial data using the GEOGRAPHY data type. These functions help measure distances, determine spatial relationships, and manipulate geographic features. By combining BigQuery ML and geospatial analytics, organizations can unlock valuable insights for predictive modeling and location-based analysis.

Understanding key BigQuery functions enhances predictive modeling and geospatial analysis. This section explores ML.PREDICT, ML.EVALUATE, ML.RECOMMENDATIONS, ST_UNION, and ST_DISTANCE to simplify forecasting, recommendations, and spatial computations using SQL.

We will use an e-commerce dataset to showcase the usage of each function.

The ML.PREDICT function in BigQuery allows users to make predictions using trained machine learning models without additional infrastructure. It supports various models, including regression, deep neural networks, decision trees, and imported models like TensorFlow and XGBoost. Predictions can be run during or after model creation.

1ML.PREDICT(

2 MODEL `project_id.dataset.model_name`,

3 (SELECT column1, column2 FROM `project_id.dataset.input_table`)

4)

Here:

An e-commerce company wants to predict whether a customer will likely churn based on their purchase frequency, average spending, and browsing behavior. Using a pre-trained churn prediction model, ML.PREDICT can estimate the probability of churn for each customer.

Syntax:

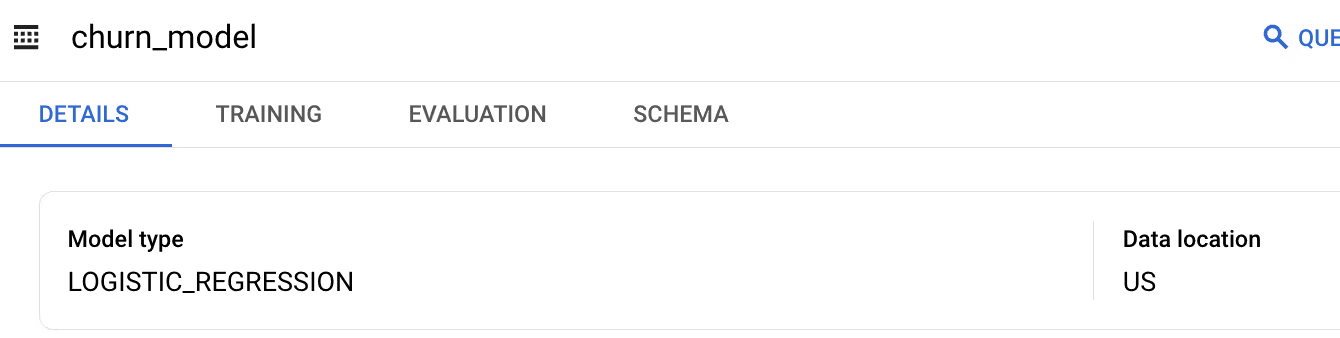

Before using ML.PREDICT, you need to train a model. This model predicts whether a customer will churn based on their order history.

1CREATE MODEL `owox-d-ikrasovytskyi-001.OWOX_Demo.churn_model`

2OPTIONS(

3 model_type = 'logistic_reg', -- Logistic Regression for binary classification

4 input_label_cols = ['Churn'], -- The column to predict

5 auto_class_weights = TRUE, -- Automatically balances class weights

6 data_split_method = 'RANDOM', -- Splits data randomly

7 data_split_eval_fraction = 0.2 -- Uses 20% of data for evaluation

8) AS

9SELECT

10 CustomerID,

11 Purchase_Frequency,

12 AvgOrderValue,

13 BrowsingTime,

14 Churn

15FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Ecommerce_Dataset`;

Then you can use the following query to predict churn.

1SELECT

2 CustomerID,

3 predicted_Churn AS Churn_Probability

4FROM ML.PREDICT(

5 MODEL `owox-d-ikrasovytskyi-001.OWOX_Demo.churn_model`,

6 (

7 SELECT

8 CustomerID,

9 TotalOrders AS Purchase_Frequency,

10 AvgOrderValue,

11 BrowsingTime

12 FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Ecomm_dataset_Without_Churn`

13 )

14);

Here:

This query helps identify customers at risk of leaving, allowing businesses to take proactive retention measures.

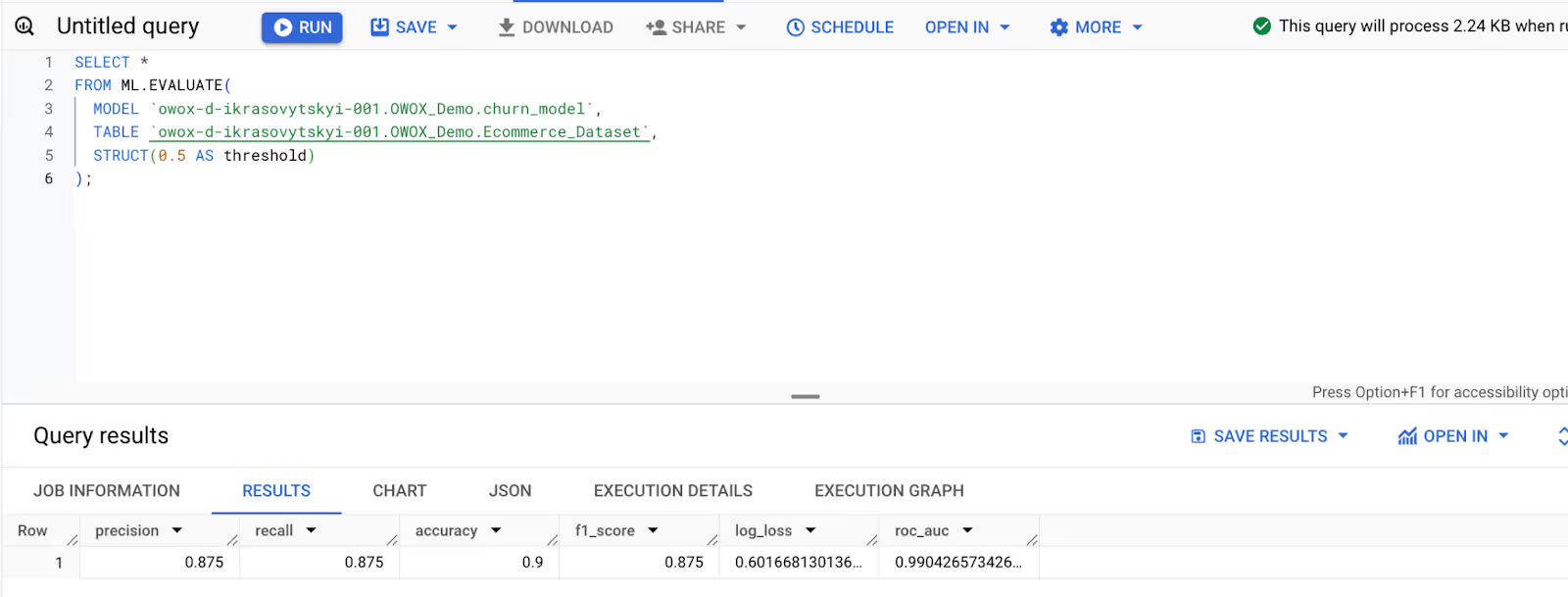

The ML.EVALUATE function in BigQuery assesses a model’s performance by calculating key metrics like accuracy, precision, recall, and RMSE. It helps determine how well a trained model predicts outcomes.

1SELECT *

2FROM ML.EVALUATE(

3 MODEL `project_id.dataset_name.model_name`,

4 TABLE `project_id.dataset_name.input_table`,

5 STRUCT(threshold_value AS threshold)

6);

Here:

An e-commerce company wants to evaluate the performance of a machine learning model that predicts whether a customer will make a purchase based on their past interactions, total spending, and frequency of purchases. The evaluation will provide metrics like accuracy, precision, and recall to assess the model's effectiveness.

Syntax:

1SELECT *

2FROM ML.EVALUATE(

3 MODEL `owox-d-ikrasovytskyi-001.OWOX_Demo.churn_model`,

4 TABLE `owox-d-ikrasovytskyi-001.OWOX_Demo.Ecommerce_Dataset`,

5 STRUCT(0.5 AS threshold)

6);

Here:

This result helps businesses assess the model’s reliability in predicting customer purchases, enabling better targeting and personalized marketing strategies.

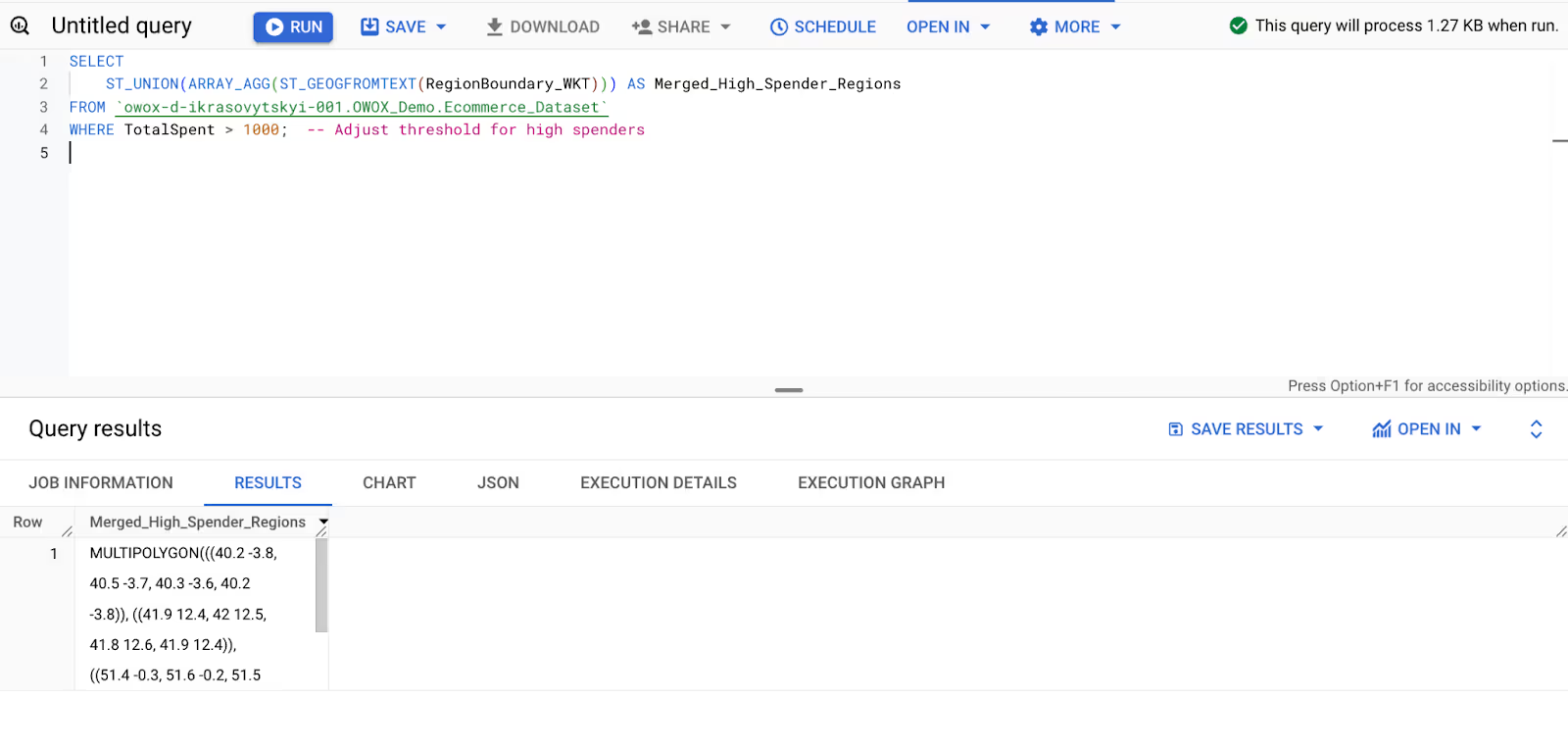

The ST_UNION function in BigQuery merges multiple geographic boundaries into a single shape. It is useful for aggregating spatial data, such as combining district boundaries for urban planning or regional analysis.

1SELECT ST_UNION(geography_column)

2FROM `project_id.dataset.geospatial_table`

Here:

An e-commerce company wants to merge region boundaries for specific customer groups, such as high spenders, into a single area. They use the ST_UNION function to combine these boundaries for better analysis and decision-making.

Syntax:

1SELECT

2 ST_UNION(ARRAY_AGG(ST_GEOGFROMTEXT(RegionBoundary_WKT))) AS Merged_High_Spender_Regions

3FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Ecommerce_Dataset`

4WHERE TotalSpent > 1000; -- Adjust threshold for high spenders

Here:

This result helps businesses visualize and analyze geographic regions with high-spending customers for targeted campaigns.

The ST_DISTANCE function in BigQuery calculates the distance between two geographic points. It is useful for measuring proximity between locations, such as customer addresses and store locations, to optimize logistics and market analysis.

1SELECT ST_DISTANCE(geography_point1, geography_point2)

2FROM `project_id.dataset.geospatial_table`

Here:

An e-commerce company wants to analyze customer proximity to its stores to identify underserved areas. The ST_DISTANCE function calculates the distance between each customer location and the nearest store.

Syntax:

1SELECT

2 CustomerID,

3 ST_DISTANCE(

4 ST_GEOGPOINT(CustomerLon, CustomerLat),

5 ST_GEOGPOINT(StoreLon, StoreLat)

6 ) AS Distance_Meters

7FROM `owox-d-ikrasovytskyi-001.OWOX_Demo.Ecommerce_Dataset`

8ORDER BY CustomerID, Distance_Meters;

Here:

The result provides distance insights, helping businesses identify underserved regions and optimize store placements.

While BigQuery ML and geography functions simplify data analysis, users often face issues like model errors, schema mismatches, and spatial inconsistencies. Understanding these challenges helps ensure accurate predictions and geospatial insights.

⚠️ Error:

In BigQuery, the ML.PREDICT function fails when the specified model does not exist in the dataset or project. This issue also affects ML.EVALUATE and ML.RECOMMENDATIONS, as they rely on existing models. Errors typically occur due to incorrect model names, missing datasets, or models stored in different locations.

✅ Solution:

To check if a model has been created or not, do the following-

⚠️ Error:

BigQuery’s ML.PREDICT function fails when the input data schema does not match the training data schema. This happens when feature columns are missing, have different data types, or are incorrectly formatted. Schema mismatches can lead to errors across other ML functions like ML.EVALUATE and ML.RECOMMENDATIONS.

✅ Solution:

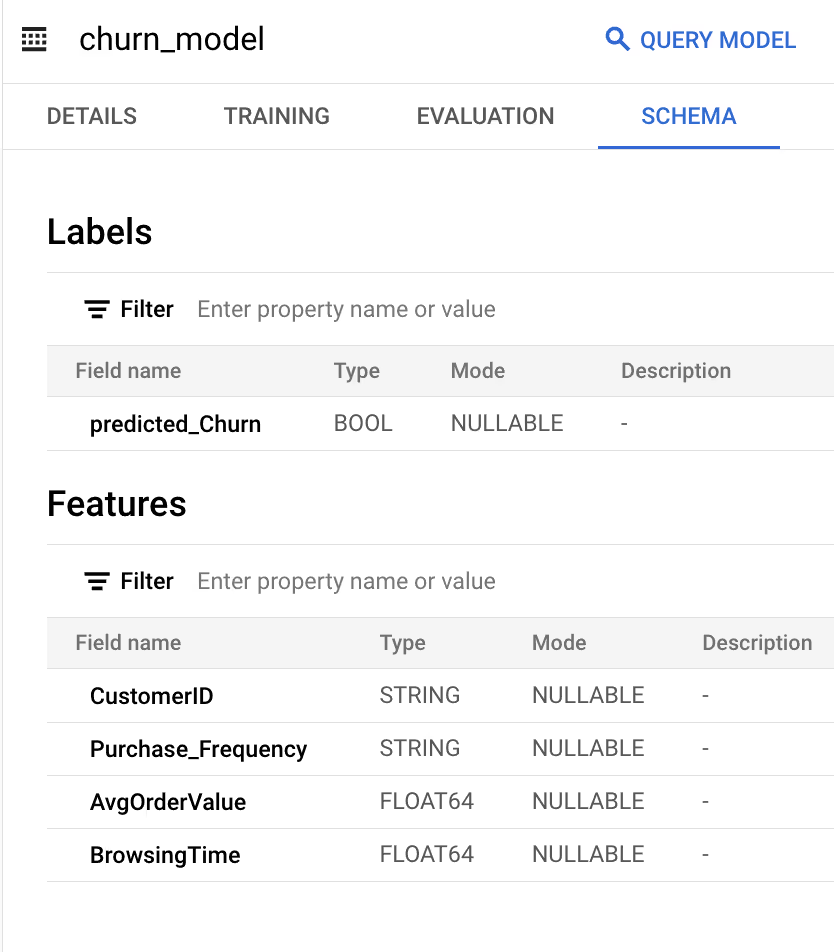

By clicking on the model name in the “Explorer” pane, you can click on “Schema” to validate the data type.

⚠️ Error:

Missing or incorrectly formatted labels in the evaluation dataset can cause ML.EVALUATE to fail. If the label column is absent, contains NULL values, or is misnamed, BigQuery cannot compute essential evaluation metrics like accuracy and recall. Without a valid label column, assessing model performance becomes impossible.

✅ Solution:

Ensure the evaluation dataset includes a properly named label column with no NULL values. Verifying label consistency before running ML.EVALUATE prevents errors and ensures accurate model assessment.

⚠️ Error:

BigQuery’s ML.EVALUATE function fails when evaluation metrics do not match the model type. This issue arises when regression metrics like RMSE are applied to classification models or when classification metrics like precision and recall are used for regression models. Such mismatches lead to inaccurate or meaningless evaluations.

✅ Solution:

Ensure that the evaluation metrics align with the model type. Use classification metrics (e.g., accuracy, precision, recall) for classification models and regression metrics (e.g., RMSE, MAE) for regression models to get meaningful results.

⚠️ Error:

ML.RECOMMENDATIONS in BigQuery struggles when the dataset contains sparse or incomplete interaction data. Limited user-product interactions reduce the effectiveness of collaborative filtering, leading to poor-quality recommendations. Sparse data can cause the model to generate inaccurate or generic suggestions, impacting user engagement and personalization.

✅ Solution:

Enhance the dataset by collecting more interaction data, such as purchase history, clicks, or user ratings. Applying data augmentation techniques or incorporating external behavioral data can improve recommendation accuracy and relevance.

⚠️ Error:

The cold start problem in ML.RECOMMENDATIONS occurs when new users or items lack historical interaction data. Without prior purchases, clicks, or ratings, the model struggles to generate personalized recommendations. This results in limited or irrelevant suggestions, reducing user engagement and the effectiveness of the recommendation system.

✅ Solution:

Use popularity-based recommendations for new users and content-based filtering for new items. Gradually incorporate user interactions to improve personalization. Hybrid models combining collaborative and content-based approaches help mitigate cold start issues.

⚠️ Error:

The ST_UNION function in BigQuery fails when the dataset contains NULL or invalid geometries. If any geometry is improperly formatted, missing, or self-intersecting, the function cannot merge geographic shapes, leading to errors in spatial analysis. This issue is common when working with large or inconsistent geospatial datasets.

✅ Solution:

Before applying ST_UNION, filter out NULL or invalid geometries. Cleaning the dataset ensures smooth geospatial operations.

⚠️ Error:

Handling large datasets with ST_UNION in BigQuery can lead to slow query performance due to the complexity of merging multiple geographic shapes. As the dataset size increases, the function requires more computational resources, resulting in longer execution times. This challenge makes real-time spatial analysis difficult and inefficient.

✅ Solution:

To improve performance, filter or aggregate data before applying ST_UNION. Splitting large datasets into smaller subsets or leveraging spatial indexing techniques can help minimize computational costs and speed up query execution.

⚠️ Error:

The ST_DISTANCE function in BigQuery fails or returns inaccurate results when the input geometries are of different types. For example, if one geometry is a point and the other is a polygon, the function cannot compute the distance correctly. Mismatched geometry types lead to inconsistencies in spatial analysis and incorrect calculations.

✅ Solution:

Ensure both geometries are of the same type before using ST_DISTANCE. Convert polygons to points using ST_CENTROID() or verify geometry consistency using ST_GEOMETRY_TYPE(). Standardizing input types improves accuracy and prevents errors.

⚠️ Error:

Spatial Reference ID (SRID) mismatches occur when geometries in BigQuery have different coordinate systems. Since ST_DISTANCE, ST_UNION, and other geospatial functions require consistent SRIDs, using mismatched references leads to inaccurate calculations or query failures. This issue arises when datasets are sourced from different geographic systems.

✅ Solution:

To prevent formatting issues, use a function that ensures standards-compliant output. If exporting from PostGIS, apply the ST_MakeValid function to standardize the data. Alternatively, import the data as text and convert it using ST_GEOGFROMTEXT or ST_GEOGFROMGEOJSON with the make_valid parameter set to TRUE, which attempts to repair invalid polygons.

To identify or bypass improperly formatted spatial data, use the SAFE function prefix.

The following query retrieves invalid GeoJSON entries in a dataset:

1SELECT

2 <PrimaryKeyColumn>,

3 <WKTColumn> AS invalid_wkt

4FROM

5 `<your_project>.<your_dataset>.<your_table>`

6WHERE

7 <WKTColumn> IS NOT NULL

8 AND SAFE.ST_GeogFromText(<WKTColumn>) IS NULL;BigQuery provides a variety of functions that help users efficiently manage, transform, and analyze large datasets. These functions allow seamless data manipulation, making complex queries more accessible and powerful.

By leveraging these BigQuery functions, users can streamline data management, enhance query performance, and derive actionable insights for better decision-making.

The OWOX Reports enhances reporting capabilities by making data analysis more efficient and accessible. It enables users to generate insightful reports without the need for complex SQL queries, simplifying data-driven decision-making for businesses.

By leveraging this extension, data professionals can optimize queries, streamline reporting workflows, and gain deeper insights from BigQuery data.

Functions like APPROX_QUANTILES, ARRAY_AGG, SAFE_CAST, and LAG/LEAD are often overlooked. These functions help in faster approximations, handling errors gracefully, and improving analytical queries for trend analysis and aggregations.

BigQuery ML functions like ML.PREDICT, ML.EXPLAIN_FORECAST, and ML.TRANSFORM automates machine learning workflows, reducing manual effort. They enable scalable model training, feature engineering, and predictive analysis using SQL.

Yes, functions like ML.DECISION_TREE, ML.TRANSFORM, and ML.WEIGHTS help with model interpretation, feature transformation, and weight analysis, making complex ML tasks more accessible within BigQuery.

BigQuery geography functions help in distance calculations (ST_DISTANCE), spatial joins (ST_INTERSECTS), and area aggregation (ST_UNION). They are widely used in logistics, urban planning, and market segmentation.

Use ML.FEATURE_INFO to check feature consistency, ML.WEIGHTS for model insights, and SAFE_CAST to handle data type mismatches. Reviewing input data schemas ensures smoother transformations.

Filter or aggregate data before applying ST_UNION, process smaller batches, and use spatial indexing techniques to optimize performance. Pre-validating geometries can also reduce unnecessary computations.