Imagine running a business where sales, customer, and product data are scattered across multiple systems. Every time analysts try to generate reports, they struggle with redundant information, inconsistent entries, and sluggish query performance. The more data grows, the harder it becomes to extract meaningful insights efficiently.

This is where the Snowflake Schema comes in. It organizes data into structured, normalized tables, optimizes storage, reduces redundancy, and ensures data integrity. But is it the right choice for your business?

In this guide, we’ll explore the architecture, advantages, and real-world applications of the Snowflake Schema.

A Snowflake Schema is a multidimensional data model that extends the Star Schema by normalizing dimension tables into sub-dimensions. This structure reduces data redundancy and enhances storage efficiency.

Commonly used in OLAP data warehouses, data marts, and relational databases, it supports business intelligence, reporting, and scalable analytics.

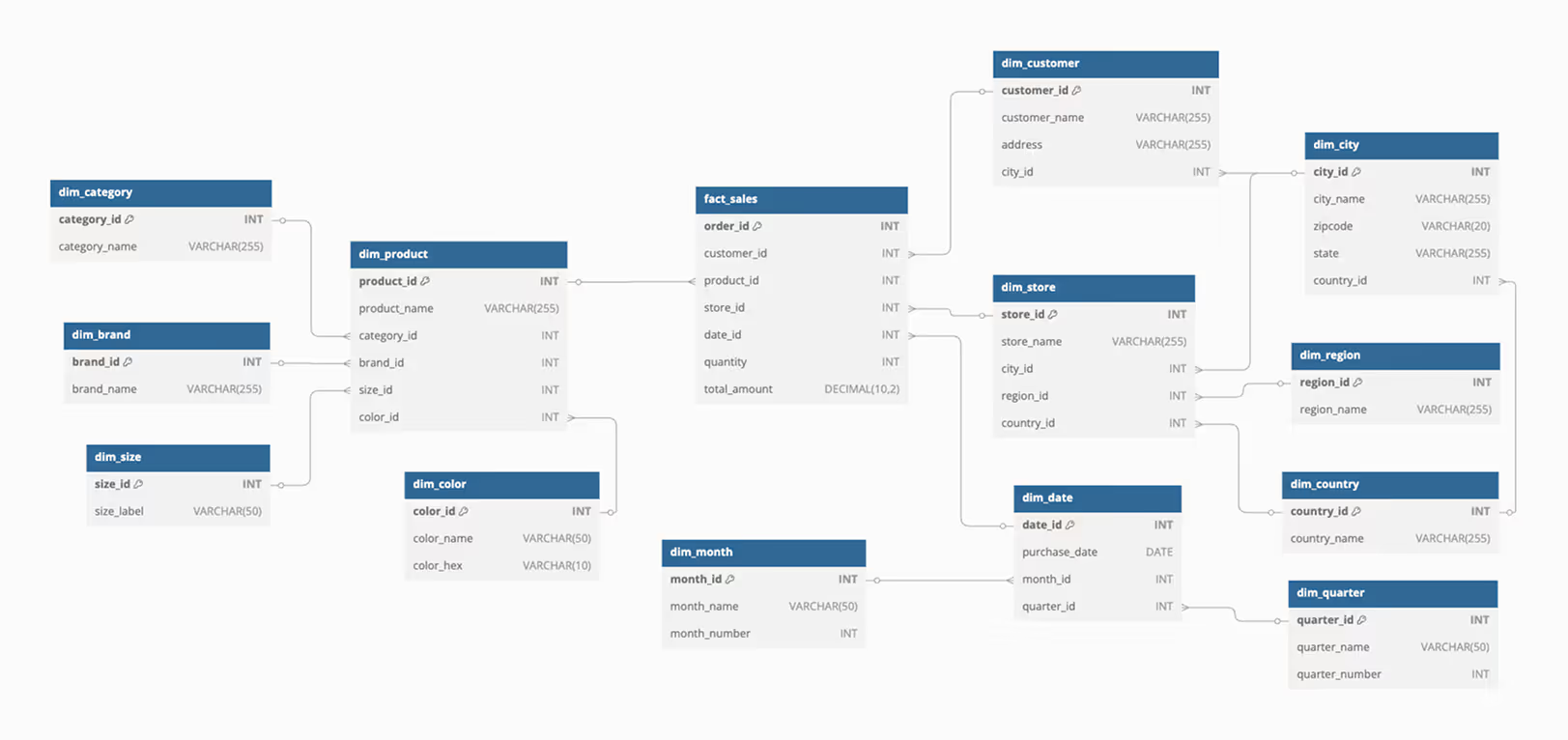

In a sales-focused Snowflake Schema, dimension tables are further normalized to reduce data redundancy and improve storage efficiency.

Instead of having all attributes stored in a single table, related attributes are split into sub-dimensions, maintaining a more structured approach to data organization. This setup enables efficient reporting, trend analysis, and business intelligence.

A Snowflake Schema consists of multiple layers that work together to ensure efficient data storage, processing, and governance. These layers play a crucial role in optimizing query performance, scalability, and data integrity.

The compute layer in Snowflake is responsible for executing queries and handling data processing tasks. It is independent of storage, allowing users to scale processing power up or down based on workload demands. Snowflake achieves this through virtual warehouses, which are dedicated compute clusters that can run multiple queries simultaneously.

For example, a retail company must generate a real-time sales report during peak shopping hours. Scaling up its virtual warehouse ensures that high-volume queries run efficiently without affecting other business processes. Once the workload decreases, the warehouse can be scaled down to reduce costs.

The storage layer manages structured and semi-structured data, efficiently storing it in cloud-based storage systems like Amazon S3, Google Cloud Storage, or Azure Blob Storage. Snowflake’s multi-cluster, shared data architecture ensures that data remains centralized while allowing multiple workloads to access it concurrently.

Suppose a company tracks customer transactions, product inventory, and supplier data from different regions. Snowflake’s centralized storage ensures that all departments access a single source of truth, enabling consistent reporting across the organization.

The metadata layer stores essential details about databases, schemas, tables, and query history. It enables query optimization, access control, and data governance by maintaining system-wide metadata. This layer is crucial for managing data lineage, indexing, and query execution plans.

Imagine a retail company that needs to generate year-end sales reports for financial analysis and compliance audits. The metadata layer maintains a query history log, allowing analysts to track modifications, validate sales data accuracy, and ensure consistency in reports.

Virtual warehouses are Snowflake’s on-demand compute clusters, designed to process queries and execute data transformations. These warehouses operate independently, meaning different teams can run workloads without affecting each other. They can be scaled up or down dynamically, allowing businesses to balance cost and performance.

Suppose a marketing team is running a customer segmentation analysis while the finance team processes monthly revenue reports. Separate virtual warehouses allow both teams to run large queries simultaneously without slowing each other down.

Snowflake’s secure data-sharing feature allows organizations to share datasets with external partners or internal teams without duplicating data. By granting direct access, users can collaborate across different accounts while maintaining data security and governance.

This approach reduces ETL (Extract, Transform, Load) processes, minimizes storage costs, and ensures that all users work with real-time, consistent data.

Suppose a retail company shares sales performance data with a third-party analytics firm for customer behavior analysis. Instead of duplicating large datasets, Snowflake’s data-sharing feature allows analysts to access live data in real-time,

Security is a core component of Snowflake’s architecture. It offers end-to-end encryption, role-based access control (RBAC), and audit logging to protect data from unauthorized access. Snowflake also ensures compliance with industry regulations such as GDPR, HIPAA, and SOC 2.

For instance, a financial services company needs to generate regulatory compliance reports while ensuring data security. Using role-based access control (RBAC), analysts can only access authorized financial data, while audit logs track report generation and access history.

Creating a Snowflake Schema involves strategic structuring to enhance storage efficiency, boost query performance, and ensure data integrity. Let’s explore the key design guidelines that help achieve a scalable and well-organized data model.

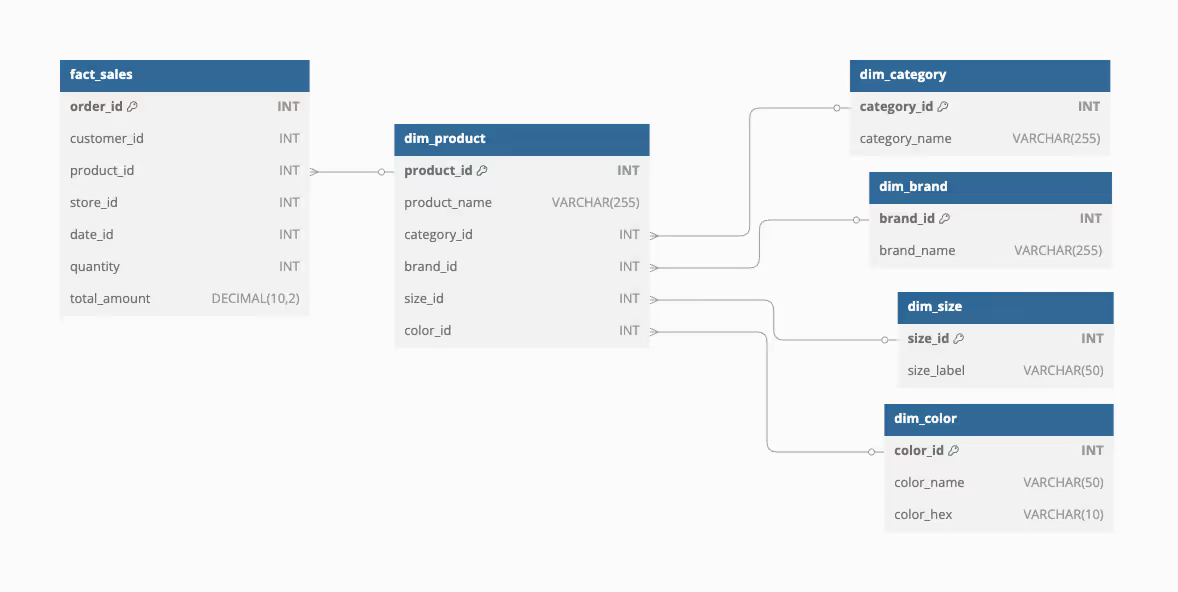

Normalization in a Snowflake Schema breaks down large dimension tables into smaller, related tables, reducing redundancy and improving data consistency. By structuring data hierarchically, businesses can maintain efficient storage and ensure data accuracy.

Example:

A Product Dimension table stores ProductID, CategoryID, BrandID, SizeID, and ColorID. Instead of storing repeated category names in every row, CategoryID links to a separate Category Table, reducing duplication and ensuring consistent classification across all product records.

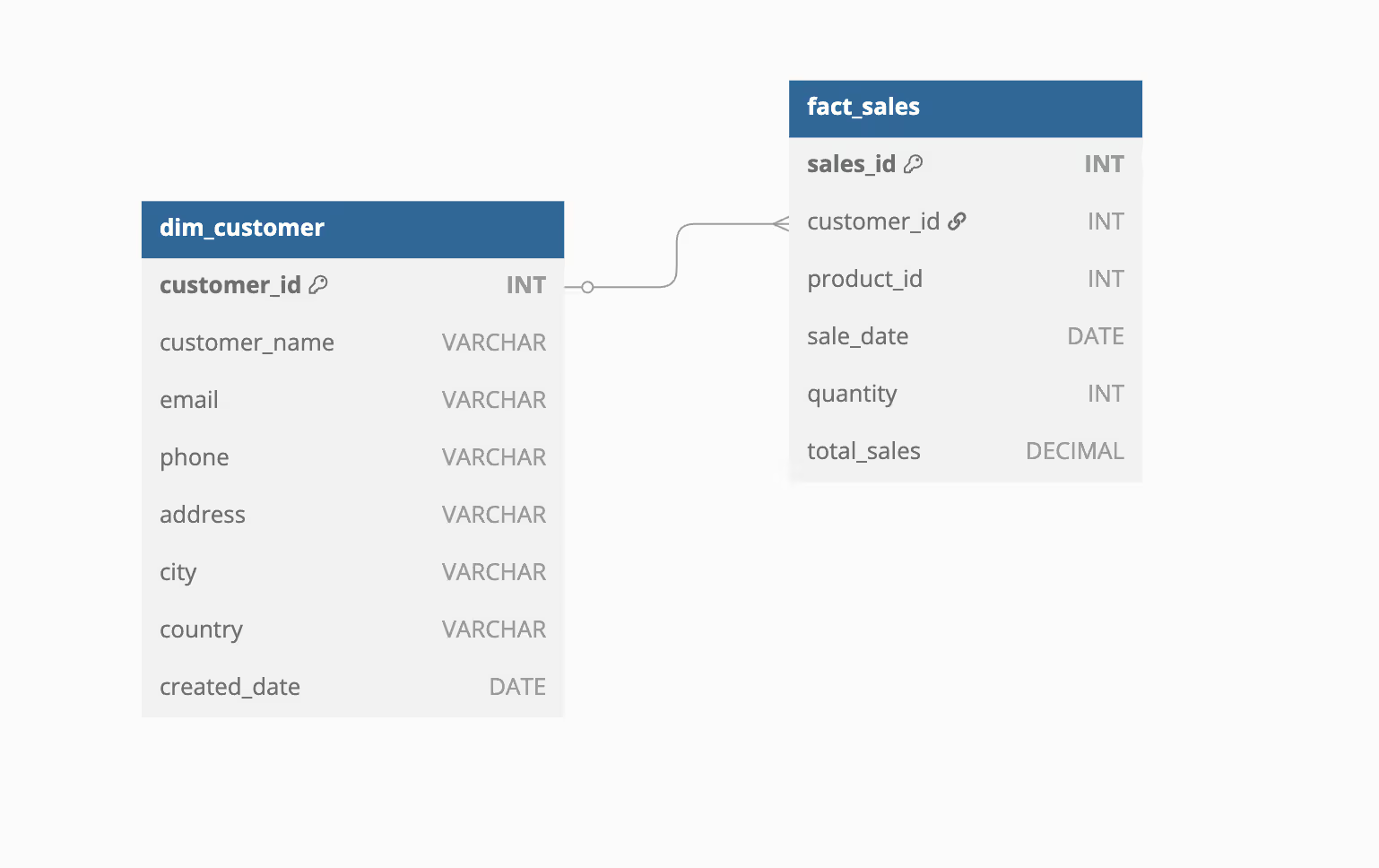

Using surrogate keys (unique integer identifiers) instead of natural keys (like email addresses or product names) enhances data consistency, query performance, and scalability. Surrogate keys remain unchanged even if business rules evolve, preventing cascading updates across tables.

Example:

A Customer Dimension table includes a CustomerID (surrogate key) instead of using an email address as the primary key. This ensures faster joins when linking to a Sales Fact Table for analysis, enabling businesses to track purchase trends without worrying about changes to customer emails.

Granularity defines the level of detail stored in a fact table, impacting query efficiency and reporting accuracy. Choosing the right granularity ensures data is neither too detailed (slowing performance) nor too aggregated (limiting insights).

Example:

A Sales Fact Table records transactions at the daily level, including ProductID, CustomerID, StoreID, DateID, Quantity, and Total Sales. This setup allows analysts to track daily revenue trends, measure store performance, and drill down into product-level insights for more detailed decision-making.

Conformed dimensions are shared dimensions used across multiple fact tables, ensuring data consistency and accuracy in reporting. They enable different business processes to use the same reference data, allowing for seamless comparison and aggregation across multiple datasets.

Example:

Let's assume there are two fact tables:

Both tables require product details for analysis, but instead of storing product attributes separately, they use a shared Product Dimension Table (dim_product). This conformed dimension ensures that product names, categories, and brand details remain consistent across both sales and inventory reports.

In modern data warehousing, maintaining accuracy, efficiency, and scalability is crucial for handling large datasets. Let’s explore the key advantages of using a Snowflake Schema in data modeling.

The Snowflake Schema enforces data consistency by breaking down large dimension tables into smaller, related tables. This structured approach reduces data anomalies, inconsistencies, and duplication, ensuring high-quality, reliable datasets for analysis.

Since the Snowflake Schema normalizes dimension tables, it reduces redundant data storage, leading to smaller disk space requirements. By structuring data into hierarchical layers, only necessary attributes are stored, optimizing query performance and storage costs.

The Snowflake Schema minimizes data redundancy by normalizing dimension tables into smaller, related tables, ensuring efficient storage and improved data integrity. Unlike denormalized schemas, where duplicate data is stored multiple times, the Snowflake Schema eliminates unnecessary repetition.

The Snowflake Schema provides a scalable and adaptable data model, making it easier to integrate new dimensions or fact tables as business needs evolve. Its normalized structure allows for seamless schema modifications without affecting existing data relationships.

Both snowflake and Star Schemas are widely used in data warehousing, but they differ significantly in structure, performance, storage efficiency, and flexibility. Below, we compare their key differences.

The Star Schema has a centralized fact table linked directly to denormalized dimension tables, making it simpler and easier to design. In contrast, the Snowflake Schema normalizes dimension tables into multiple hierarchical layers, reducing redundancy but increasing complexity.

While the Star Schema is better suited for straightforward analytics, the Snowflake Schema provides greater data organization for large-scale data models.

In terms of query speed, the Star Schema generally performs faster because it involves fewer joins, making it more efficient for OLAP queries and reporting. The Snowflake Schema, with its multiple joins between normalized tables, may have slower query performance but offers better data integrity. Performance trade-offs depend on whether query speed or data accuracy is the priority.

A Star Schema requires more storage space since it stores repeated dimension data within its tables. In contrast, the Snowflake Schema reduces data redundancy by storing reference attributes in separate sub-dimension tables, making it more space-efficient. This advantage is particularly useful in cloud-based storage systems, where minimizing storage costs is a priority.

The Star Schema is ideal for small to medium-sized data warehouses, offering simpler reporting and faster queries with denormalized dimensions. In contrast, the Snowflake Schema is best for large-scale data warehouses, ensuring data consistency, flexibility, and storage efficiency by normalizing dimension tables.

The Snowflake Schema is widely used in enterprise data management, particularly where data consistency, structured hierarchies, and storage efficiency are essential.

Below are key use cases across different business functions:

Let's look at a real-world application:

A multinational corporation needs a financial reporting system that tracks revenue, expenses, and regulatory compliance across multiple subsidiaries. The Snowflake Schema ensures accurate historical tracking, reducing data duplication while maintaining audit trails and risk assessments.

Objects Used:

Suppose a B2B software company needs to analyze customer interactions, sales performance, and retention rates. The Snowflake Schema enables the structured tracking of customer behaviors, purchase history, and sales team activities while ensuring consistent customer data across departments.

Objects Used:

Imagine a global retail company that needs an inventory reporting tool to monitor stock levels, supplier performance, and warehouse operations. The Snowflake Schema structures product hierarchies, warehouse locations, and supplier data, ensuring real-time stock tracking across multiple warehouses.

Objects Used:

For Example, A digital marketing agency needs to track advertising performance, campaign effectiveness, and customer engagement across multiple channels. The Snowflake Schema helps segment marketing data by different dimensions, ensuring a detailed ROI analysis.

Objects Used:

While the Snowflake Schema enhances data integrity and reduces redundancy, it comes with certain challenges that can impact storage efficiency, query performance, and schema complexity. Below are the key limitations and recommended solutions to overcome them.

⚠️ Challenge: Snowflaking reduces the dimension table size by eliminating redundancy, but the overall storage savings in a data warehouse is often negligible. The additional complexity in joins can outweigh the benefits of reduced disk space.

✅ Solution: Only normalize dimension tables when necessary, prioritizing query performance over storage optimization. Use denormalized dimensions for frequently queried data to minimize expensive joins and lookups.

⚠️Challenge: Over-normalization can increase query complexity by adding unnecessary joins, leading to slower performance. Normalizing every dimension without purpose results in poor usability and longer response times.

✅ Solution: Avoid snowflaking dimensions unless strict normalization is required for business rules or governance. Keep frequently accessed attributes within the primary dimension table for faster lookups.

⚠️Challenge: Breaking hierarchies into separate dimension tables adds unnecessary joins, degrading query performance. Instead, hierarchies should reside within a single dimension to maintain query speed and readability.

✅ Solution: Store hierarchical relationships within a single dimension table, ensuring optimized aggregations for reporting. Use predefined rollups where necessary to facilitate faster analysis.

⚠️Challenge: Poorly designed hierarchies can lead to duplicated aggregation logic, affecting accuracy and maintainability. Storing data at higher levels may limit granular insights, leading to data inconsistencies in reports.

✅ Solution: Always design hierarchies at the lowest possible detail, such as individual transactions or SKU-level sales. This allows for flexible aggregation and consistent roll-up calculations across reports.

Implementing a Snowflake Schema effectively requires careful planning, normalization, and ongoing maintenance. By following best practices, businesses can enhance query performance, maintain data integrity, and ensure scalability.

Before designing the schema, it is crucial to understand the specific business objectives it needs to support. Clearly defining key metrics, reporting needs, and analytical goals ensures that the schema aligns with organizational priorities.

Fact tables store business transactions, while dimension tables provide descriptive attributes for analysis. The proper identification of these elements ensures that data relationships are well-defined.

A well-structured schema ensures that fact tables contain only numerical and measurable data, while dimension tables store descriptive details. Using surrogate keys in dimension tables improves query performance and maintains consistency across different data sources.

Normalization reduces data redundancy and improves consistency by breaking down dimension tables into smaller, related tables. While normalization enhances data integrity, excessive normalization can lead to performance issues due to complex joins.

Conformed dimensions enable data consistency across multiple fact tables, allowing for unified reporting across departments. Standardizing dimension tables ensures that different analytical processes use the same reference data, eliminating discrepancies.

Thorough testing is essential to ensure that the schema meets business requirements and supports efficient querying. Validating relationships between tables, running performance tests, and checking for data consistency help prevent errors. Regular testing ensures that the schema functions as expected and can handle real-world workloads.

As business requirements evolve, the schema should be reviewed and updated regularly. Monitoring query performance, optimizing indexes, and restructuring tables when necessary, ensure that the schema remains efficient. Keeping the schema aligned with changing data needs enhances its long-term usability and scalability.

Designing and maintaining a Snowflake Schema requires balancing normalization, performance, and clarity across multiple linked tables.

With OWOX Data Marts, analysts can model complex relationships directly in their data warehouse, define joins between fact and dimension tables, and document every relationship for reuse across dashboards.

Instead of rewriting SQL or duplicating logic, you can manage a governed schema once and deliver consistent data to Sheets, or Looker Studio.

A Snowflake Schema normalizes dimension tables into smaller related tables, reducing redundancy, while a Star Schema keeps denormalized dimensions, improving query performance with fewer joins but increasing data redundancy.

A Snowflake Schema improves data integrity, reduces redundancy, optimizes storage, and enhances flexibility for complex queries. It ensures structured data organization, enabling efficient data retrieval and scalability in large data warehouses.

Use a Snowflake Schema for large-scale, complex data warehouses requiring structured hierarchies, reduced redundancy, and efficient storage where query performance is secondary to maintaining data integrity and consistency across dimensions.

A Snowflake Schema increases query complexity and requires more joins, leading to slower performance. It also adds maintenance overhead, making it harder to manage compared to simpler, denormalized Star Schemas.

By eliminating redundant data and structuring dimensions hierarchically, a Snowflake Schema reduces storage requirements and improves data integrity, enabling optimized filtering, indexing, and aggregation for specific analytical workloads.

While possible, a Snowflake Schema is less efficient for real-time analytics due to multiple joins slowing query speed. Real-time use cases prefer denormalized models like the Star Schema for faster execution.

The key components include fact tables (storing transactions), dimension tables (descriptive attributes), hierarchical sub-dimensions (normalized data), and metadata layers for query optimization, indexing, and access control.